Commentary

Microsoft Takes On Deepfakes

By Laurie Sullivan, Staff Writer, @lauriesullivan, September 3, 2020

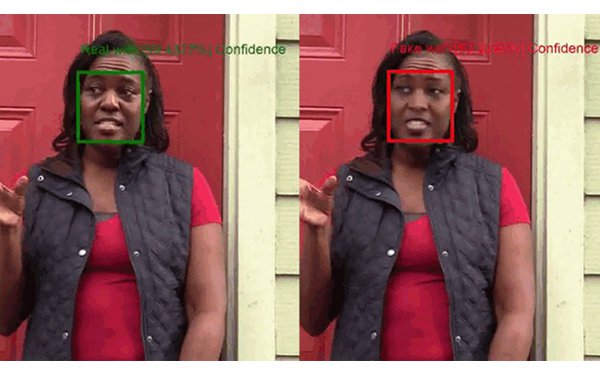

Microsoft has developed a technology to detect deepfakes that it calls Video Authenticator. The tool can analyze a still photo or video and provide a percentage chance, or confidence score, that the media has been artificially manipulated. It works by detecting the blending boundary of the deepfake and subtle fading or greyscale elements that might not be detectable by the human eye.

This technology was originally developed by Microsoft Research in coordination with Microsoft’s Responsible AI team and the Microsoft AI, Ethics and Effects in Engineering and Research (AETHER) Committee, which is an advisory board at Microsoft that helps to ensure that new technology is developed and fielded in a responsible manner.

Video Authenticator was created using a public dataset from FaceForensics++ and was tested on the DeepFake Detection Challenge Dataset, both widely used datasets for training and testing deepfake detection technologies.


Microsoft's second technology, which can detect manipulated content and assure people that the media they are viewing is authentic, has two components. The first is a tool built into Microsoft Azure that enables a content producer to add digital hashes and certificates to a piece of content.

The digital hashes and certificates then live with the content as metadata wherever it travels online.

The second is a reader that can exist as a browser extension or in other forms. It checks the certificates and matches the digital hashes, letting people know with a high degree of accuracy that the content is authentic and hasn't been changed, as well as providing details about who produced it.
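For readers curious how hash matching works in principle, here is a minimal Python sketch, assuming SHA-256 as the hash function. The helper names `publish` and `verify` are hypothetical and are not Microsoft's API. The sketch also leaves out the certificate step, which in a real system would rely on a digital signature to show who produced the content; it only shows how a reader can tell whether the bytes changed after the hash was attached.

```python
import hashlib
import hmac

# Hypothetical sketch: function names are illustrative, not Microsoft's API.
# Certificate (digital signature) checks are omitted; only hash matching is shown.

def publish(content: bytes) -> dict:
    """Producer side: attach a SHA-256 digest of the content as metadata."""
    digest = hashlib.sha256(content).hexdigest()
    return {"content": content, "metadata": {"sha256": digest}}

def verify(package: dict) -> bool:
    """Reader side: recompute the digest and compare it with the stored one."""
    expected = package["metadata"]["sha256"]
    actual = hashlib.sha256(package["content"]).hexdigest()
    # Constant-time comparison of the two hex strings.
    return hmac.compare_digest(expected, actual)

original = publish(b"original video bytes")
# Same metadata, but the content was edited after publishing.
tampered = {"content": b"edited video bytes", "metadata": original["metadata"]}
print(verify(original))   # True
print(verify(tampered))   # False
```

Any change to the content, even a single byte, produces a different digest, so the stored hash no longer matches. The hash by itself says nothing about the producer, which is why the certificates matter.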

Disinformation is not new, but marketers might not know that Microsoft has supported research that cataloged 96 foreign influence campaigns targeting 30 countries between 2013 and 2019. These campaigns used disinformation on social media platforms to defame notable people, persuade the public or polarize debates.

While 26% of these campaigns targeted the U.S., other targeted countries included Armenia, Australia, Brazil, Canada, France, Germany, the Netherlands, Poland, Saudi Arabia, South Africa, Taiwan, Ukraine, the United Kingdom and Yemen.

About 93% included the creation of original content, while 86% amplified pre-existing content and 74% distorted objectively verifiable facts.

The data comes from research by Jacob Shapiro at Princeton University, supported by Microsoft and updated this month.

Microsoft has been working on two technologies to address different parts of the disinformation problem as part of the company's Defending Democracy Program. In addition to fighting disinformation, the program helps protect voting through ElectionGuard and helps secure campaigns and others involved in the democratic process through AccountGuard, Microsoft 365 for Campaigns and Election Security Advisors.